Sound Design and Ambisonic Mixing for VR Application – Virtual Diver

Virtual Diver is an innovative research project created by a consortium of IT companies under the supervision of the National and Kapodistrian University of Athens. The application is being designed and developed as a complex, interactive digital platform which, in its final form, will let users tour the island of Santorini virtually using 360-degree video and Virtual and Augmented Reality techniques. The final product will be available on a range of devices, such as tablets, phones and virtual reality headsets. My role is to create the sound design and perform the ambisonic mixing for all the different media, with the aim of creating an immersive experience for the user. At a later stage, I will be working in Unity to implement interactive sound for the Virtual Reality components of the project.

This project is currently in development and will be completed by the end of 2021. I am using Cubase 10.5 to perform first-order ambisonic mixing, making the files compatible with 360-degree video. For any queries regarding this project, do not hesitate to contact me.
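The DAW's ambisonic panner performs the encoding internally; the sketch below only illustrates the maths behind placing a mono sound in a first-order ambisonic mix. It assumes the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation), which is widely used for 360-degree video, and the function name is hypothetical rather than anything from the project's Cubase session.

```python
import math

def encode_foa_ambix(signal, azimuth_deg, elevation_deg):
    """Encode a mono signal into first-order ambisonics.

    Assumed convention: AmbiX (ACN order W, Y, Z, X; SN3D normalisation).
    Azimuth is measured anticlockwise from straight ahead, elevation upwards
    from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    gains = (
        1.0,                          # W (ACN 0): omnidirectional component
        math.sin(az) * math.cos(el),  # Y (ACN 1): left/right figure-of-eight
        math.sin(el),                 # Z (ACN 2): up/down figure-of-eight
        math.cos(az) * math.cos(el),  # X (ACN 3): front/back figure-of-eight
    )
    return [[g * s for s in signal] for g in gains]

# Example: a source 90 degrees to the left, on the horizon.
channels = encode_foa_ambix([1.0, 0.5, -0.25], azimuth_deg=90, elevation_deg=0)
```

For a source directly to the left, all the directional energy ends up in the Y channel while X and Z stay (numerically) at zero, which is a quick sanity check on the channel order.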

Master’s Thesis: A Methodological Approach to the Measurement and Analysis of Forest Acoustics

For my end-of-year research project, I studied how sound behaves inside a forest. As part of this research, I measured the sound reflections from the surface of a maple trunk inside an anechoic chamber. The measurements obtained were compared with the theoretical scattering from a cylinder, a model previously used in other forest reverberation algorithms. All the analysis and implementation for this project was carried out in MATLAB.

The results of this research showed that sound scattering from a tree trunk behaves similarly to scattering from a perfectly reflective cylinder. Forest reverberation algorithms could be significantly improved by adding filters that model the other effects taking place when sound reaches the trunk, such as absorption and diffraction.
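One simple form such an additional filter could take is a broadband gain for the strength of the reflection, followed by a one-pole low-pass filter standing in for frequency-dependent absorption at the bark. This is a hypothetical illustration, not a filter proposed in the thesis; the function names are invented here, and the gain and coefficient would have to be fitted to measured data.

```python
def one_pole_lowpass(signal, coefficient):
    """y[n] = (1 - c) * x[n] + c * y[n-1]; a larger c removes more high-frequency energy.

    The DC gain is 1, so only the high frequencies are attenuated.
    """
    if not 0.0 <= coefficient < 1.0:
        raise ValueError("coefficient must be in [0, 1)")
    out, prev = [], 0.0
    for x in signal:
        prev = (1.0 - coefficient) * x + coefficient * prev
        out.append(prev)
    return out

def absorbing_reflection(signal, reflection_gain, coefficient):
    """Hypothetical trunk reflection: broadband gain, then high-frequency absorption."""
    return one_pole_lowpass([reflection_gain * x for x in signal], coefficient)
```

In a reverberation algorithm, a filter like this would sit in each reflection path alongside the cylinder-scattering model rather than replace it.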

This project was supervised by Dr. Frank Stevens and Dr. Jude Brereton. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York. The thesis report can be found at the link below:

The Acoustics of the Parthenon

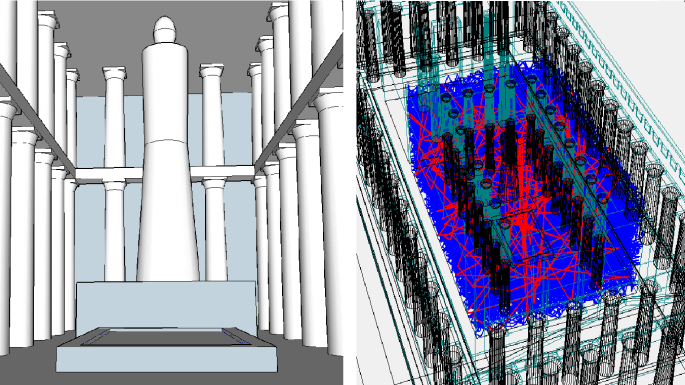

As part of this project, I created a model of the Parthenon, the main temple of the Acropolis of Athens, as it was built almost 2,500 years ago. The model was then imported into the ODEON room acoustics software to carry out acoustic measurements of both the interior and exterior of the temple and to produce Impulse Responses. Overall, this study showed that the main room of the temple was extremely reverberant, even with the door open.

The model was built in SketchUp to a high degree of accuracy using the original architectural plans of the building. The Impulse Responses obtained were analysed in MATLAB in terms of their reverberation time (RT60) and were then used to create auralisations of different instruments recorded inside an anechoic chamber.
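A common way to estimate RT60 from an Impulse Response is Schroeder backward integration followed by a straight-line fit to part of the decay, extrapolated to a 60 dB drop. The sketch below illustrates that idea; the -5 to -25 dB fitting range (a T20 estimate) is an assumption, and this is not necessarily the exact procedure used in the MATLAB analysis.

```python
import math

def schroeder_decay_db(ir):
    """Backward-integrated energy decay curve in dB, normalised to 0 dB at t = 0."""
    edc, running = [0.0] * len(ir), 0.0
    for n in range(len(ir) - 1, -1, -1):
        running += ir[n] * ir[n]  # energy remaining from sample n to the end
        edc[n] = running
    total = edc[0]
    return [10.0 * math.log10(e / total) if e > 0 else float("-inf") for e in edc]

def rt60_from_ir(ir, fs, start_db=-5.0, end_db=-25.0):
    """Fit a line to the decay between start_db and end_db (least squares) and
    extrapolate its slope to a 60 dB drop."""
    decay = schroeder_decay_db(ir)
    points = [(n / fs, d) for n, d in enumerate(decay) if end_db <= d <= start_db]
    if len(points) < 2:
        raise ValueError("the impulse response does not cover the fitting range")
    t_mean = sum(t for t, _ in points) / len(points)
    d_mean = sum(d for _, d in points) / len(points)
    slope = (sum((t - t_mean) * (d - d_mean) for t, d in points)
             / sum((t - t_mean) ** 2 for t, _ in points))  # dB per second
    return -60.0 / slope
```

A synthetic exponential decay whose amplitude falls by 60 dB in one second should return an RT60 close to 1 s, which makes a convenient check before applying the function to measured or simulated Impulse Responses.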

This project was supervised by Dr. Gavin Kearney. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York. Some of the audio examples created can be found below:

Relative Voice Tuner

I used an Arduino Uno board to create a voice tuner based on relative pitch. The user presses a button to generate a new note, which is then played by a voice synthesiser. The user then tries to sing exactly the same pitch, while the LEDs on the interface indicate how close the pitch of the user’s voice is to that of the sample. A noise gate and an octave shifter were also built to improve the performance of the tuner.
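The comparison the tuner performs can be sketched in a few lines. This is an illustrative Python version, not the original PD patch. It assumes the pitch of the voice has already been estimated in Hz, and the LED count, the cents-per-LED resolution and the gate threshold are hypothetical values. The octave shifter is modelled here by folding the deviation into half an octave either side of the target.

```python
import math

def cents_off(sung_hz, target_hz):
    """Deviation of the sung pitch from the target in cents, folded into [-600, 600)
    so that singing the right note in the wrong octave is not penalised."""
    cents = 1200.0 * math.log2(sung_hz / target_hz)
    return (cents + 600.0) % 1200.0 - 600.0

def led_index(cents, num_leds=9, cents_per_led=10.0):
    """Map a deviation to one LED in a row; the centre LED means 'in tune'.
    num_leds and cents_per_led are hypothetical, not the original interface values."""
    centre = num_leds // 2
    step = round(cents / cents_per_led)
    return max(0, min(num_leds - 1, centre + step))

def gated(rms, threshold=0.01):
    """Noise gate: only trust the pitch estimate when the input is loud enough."""
    return rms >= threshold
```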

The interface used serial communication to exchange data between the Arduino and PD Extended. All signal processing was done within the PD patch, while the Arduino handled the analogue and digital inputs and outputs.

This project was supervised by Professor Andy Hunt and Dr. Jude Brereton. All software used was free; the Arduino board was purchased, while the additional hardware was provided by the Department of Electronic Engineering of the University of York. The video below shows a demonstration of the interface:

Acoustic Survey: York Grand Opera House

On 27 February 2019, Adrià Martínes Cassorla, Ben Lee, Bernardas Pikutis and I conducted an acoustic survey inside York’s Grand Opera House. Our aim was to capture how the room sounded at different audience positions on all three floors. One source was placed at the centre of the stage and one at the centre of the orchestra pit beneath the stage. An ambisonic microphone was used to capture spatial information about the sound arriving at each position. Two positions were measured in the stalls, two in the dress circle, one in the grand circle and two in the boxes.
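The microphone model is not named above. Assuming a tetrahedral first-order ambisonic microphone (the usual design), its four raw capsule signals (A-format) are combined into B-format with a sum-and-difference matrix like the one below. The 0.5 scaling is one common convention, and real converters also apply capsule-matching filters that this sketch leaves out.

```python
def a_to_b_format(flu, frd, bld, bru):
    """Convert tetrahedral capsule signals into first-order B-format (W, X, Y, Z).

    Capsules: Front-Left-Up, Front-Right-Down, Back-Left-Down, Back-Right-Up.
    No capsule equalisation is applied in this sketch.
    """
    w, x, y, z = [], [], [], []
    for a, b, c, d in zip(flu, frd, bld, bru):
        w.append(0.5 * (a + b + c + d))  # omnidirectional pressure
        x.append(0.5 * (a + b - c - d))  # front minus back
        y.append(0.5 * (a - b + c - d))  # left minus right
        z.append(0.5 * (a - b - c + d))  # up minus down
    return w, x, y, z
```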

The Impulse Responses obtained were convolved with anechoic opera recordings from the Università di Bologna. The voice was convolved with the Impulse Responses of the source at the centre of the stage, while all the other instruments were convolved with those of the source in the orchestra pit. Given more time, we would have measured two different source positions in the orchestra pit, which would have significantly improved the projection of sound.
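Auralisation here means convolving each dry anechoic track with the Impulse Response of the matching source. A direct-form sketch follows for clarity. It runs in O(N·M) time, so full-length recordings would in practice use FFT-based convolution, and with an ambisonic Impulse Response the same operation is applied once per channel. The peak normalisation step is an added assumption.

```python
def convolve(dry, ir):
    """Direct-form linear convolution: each input sample adds a scaled copy of the IR."""
    if not dry or not ir:
        return []
    wet = [0.0] * (len(dry) + len(ir) - 1)
    for n, x in enumerate(dry):
        if x == 0.0:
            continue  # silent samples contribute nothing
        for k, h in enumerate(ir):
            wet[n + k] += x * h
    return wet

def auralise(dry, ir):
    """Convolve, then normalise the peak to 1 to avoid clipping."""
    wet = convolve(dry, ir)
    peak = max((abs(s) for s in wet), default=0.0)
    return [s / peak for s in wet] if peak > 0 else wet
```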

This project was supervised by Dr. Gavin Kearney. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York. Special thanks to Mark Waters for lending us the space to conduct the measurements, as well as for his friendly attitude and enthusiasm throughout. Auralisations of the Impulse Responses with the anechoic opera recordings can be found below:

Binaural Exercise with MATLAB

As part of this project, I used Head-Related Transfer Functions (HRTFs) to create a soundscape. The setting is a person standing between two roads, waiting to cross. While they wait, different sounds move around the listener in different directions. At some point the traffic light turns red and the person crosses to the other side.

This project was created in MATLAB, and its purpose was to familiarise us with Impulse Responses, convolution, digital filters and the perception of directional sound. All the sounds used were taken from Freesound and edited in Cubase 5.2. All movement was created in MATLAB using HRTFs, with windowing used to create smoother transitions between the IRs.
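One way to implement windowed transitions between HRTFs is overlap-add with 50%-overlapping Hann windows. Each block of the mono source is convolved with the head-related impulse response (HRIR) pair for its direction, and the overlapping windows crossfade between successive directions. This is a sketch under assumptions (a block-wise trajectory, a periodic Hann window, HRIR pairs supplied as plain lists), not the original MATLAB code, and the function names are hypothetical.

```python
import math

def hann_periodic(length):
    """Periodic Hann window; at 50% overlap consecutive windows sum to exactly 1."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / length) for n in range(length)]

def render_moving_source(mono, hrirs, block=512):
    """Binaural rendering of a moving source by windowed overlap-add.

    hrirs[i] = (left_ir, right_ir) is the HRIR pair for the direction of block i;
    the last pair is reused if the trajectory is shorter than the signal.
    """
    if block % 2:
        raise ValueError("block length must be even for 50% overlap")
    hop = block // 2
    window = hann_periodic(block)
    ir_len = max(max(len(l), len(r)) for l, r in hrirs)
    out_len = len(mono) + ir_len - 1
    left, right = [0.0] * out_len, [0.0] * out_len
    # Starting one hop early means every sample is covered by two windows summing to 1.
    for i, start in enumerate(range(-hop, len(mono), hop)):
        h_left, h_right = hrirs[min(i, len(hrirs) - 1)]
        for n in range(block):
            m = start + n
            if m < 0 or m >= len(mono):
                continue
            x = window[n] * mono[m]
            for k, h in enumerate(h_left):
                left[m + k] += x * h
            for k, h in enumerate(h_right):
                right[m + k] += x * h
    return left, right
```

Because the windows sum to one, a static source rendered with the same HRIR pair in every block comes out identical to plain convolution. Only changes in direction are smoothed.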

This project was supervised by Dr. Frank Stevens. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York. The audio file of the soundscape can be found below:

Voice Synthesis: Formant Synthesis

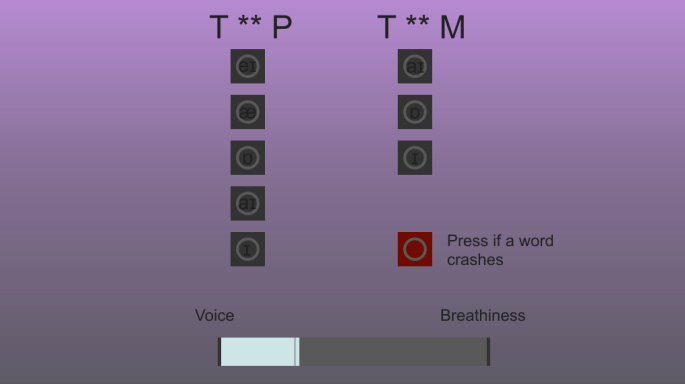

I used MAX MSP to create a voice synthesiser based on formant synthesis. The aim of this project was to synthesise similar words consisting of the same consonants but a different vowel each time, in order to compare how different vowels change the way the consonants sound.

This was achieved by first recording different monophthongs, diphthongs, consonants and entire words, and analysing them in Praat. The analysis provided information about how the different formants behave over time. Then, using MAX MSP, I built a patcher that used a sawtooth wave mixed with filtered white noise as the source, followed by five band-pass filters mimicking the behaviour of the formants. This alone produced vowels and some voiced consonants. Finally, white noise was used to create plosive consonants and to add breathiness to the synthesised voice.
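The source-filter structure described above can be sketched offline in Python. The assumptions are as follows. The formant frequencies, bandwidths and gains are rough illustrative values for an open vowel, not those measured in Praat for this project. The five filters are RBJ-cookbook band-pass biquads summed in parallel. The sawtooth is naive (not band-limited), and the noise is left unfiltered for brevity.

```python
import math
import random

def bandpass(signal, f0, bandwidth, fs):
    """RBJ-cookbook band-pass biquad (0 dB peak gain) with centre f0 and bandwidth in Hz."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * (f0 / bandwidth))  # Q = f0 / bandwidth
    b0, b2 = alpha, -alpha                           # b1 is zero for this filter
    a0, a1, a2 = 1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha
    out = []
    x1 = x2 = y1 = y2 = 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out

def synthesise_vowel(formants, f0=120.0, duration=0.5, fs=16000, noise_level=0.05, seed=0):
    """Sawtooth plus a little noise, passed through a parallel bank of formant filters."""
    rng = random.Random(seed)
    n_samples = int(duration * fs)
    source = [2.0 * ((n * f0 / fs) % 1.0) - 1.0 + noise_level * rng.uniform(-1.0, 1.0)
              for n in range(n_samples)]
    out = [0.0] * n_samples
    for freq, bw, gain in formants:
        for n, y in enumerate(bandpass(source, freq, bw, fs)):
            out[n] += gain * y
    peak = max(abs(s) for s in out) or 1.0
    return [s / peak for s in out]

# (centre Hz, bandwidth Hz, gain): illustrative values only.
VOWEL_A = [(730, 90, 1.0), (1090, 110, 0.5), (2440, 160, 0.25), (3400, 200, 0.1), (4500, 250, 0.05)]
```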

This project was supervised by Dr. Helena Daffern. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York. I am currently creating a GitHub repository to upload some of my projects, including this MAX patcher for the Voice Synthesiser.

Psychoacoustic Test: The Shepard’s Tone

I used Pure Data (PD Extended) to investigate the illusion known as the Shepard’s Tone. The patcher varied several characteristics of the tone, such as the number of harmonics and the speed and direction of the shift. The purpose of this project was to examine how each of these parameters affected the strength of the illusion, with each tone rated on a scale from 1 to 5.

The patcher synthesises the Shepard’s Tone with the appropriate parameter values, runs the listening test and prints the results to a .txt file. The user completes the test and listens to all 32 tones through the main patcher, which sends messages to the sound engines of the program to create each tone and stores the responses entered by the user.
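A minimal sketch of continuous Shepard-tone synthesis (a Shepard-Risset glissando) using the parameters listed above. It varies the number of octave-spaced components, which is assumed here to correspond to the "number of harmonics", along with the speed of the shift in octaves per second and its direction. The raised-cosine envelope and the default values are assumptions, not the settings of the PD patcher.

```python
import math

def shepard_tone(duration=5.0, fs=16000, num_components=8, octaves_per_second=0.1,
                 direction=1, f_min=20.0):
    """Octave-spaced sinusoids glide together; each wraps from the top of the range
    back to the bottom where the spectral envelope is silent, hiding the jump.
    direction: +1 for a rising illusion, -1 for a falling one."""
    if f_min * 2.0 ** num_components >= fs / 2.0:
        raise ValueError("highest component would exceed the Nyquist frequency")
    n_samples = int(duration * fs)
    phases = [0.0] * num_components
    out = []
    for n in range(n_samples):
        shift = direction * octaves_per_second * n / fs  # total glide so far, in octaves
        sample = 0.0
        for k in range(num_components):
            position = (k + shift) % num_components  # octaves above f_min
            freq = f_min * 2.0 ** position
            # Raised-cosine envelope over log-frequency: zero at both ends of the range.
            amp = 0.5 - 0.5 * math.cos(2.0 * math.pi * position / num_components)
            # Accumulate phase so the gliding frequency changes without clicks.
            phases[k] = (phases[k] + 2.0 * math.pi * freq / fs) % (2.0 * math.pi)
            sample += amp * math.sin(phases[k])
        out.append(sample / num_components)
    return out
```

In a listening test like the one described, each combination of `num_components`, `octaves_per_second` and `direction` would produce one stimulus to be rated.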

This project was supervised by Dr. Frank Stevens and was created entirely in Pure Data, which is free software. I am currently creating a GitHub repository to upload some of my projects, including this PD patcher for the Shepard’s Tone.

Undergraduate Dissertation: Pitch Tracking and Voice to MIDI

For the end-of-year research project of my Bachelor’s degree, I investigated pitch tracking of the singing voice. I chose this project because I was interested in how the voice can be thought of as an instrument that everyone possesses and is familiar with. If one’s voice could be converted into MIDI using a mobile application, then anyone would be able to compose music.

For this project I used Swift and Objective-C to create an interactive application for iOS devices. The user sings into the device’s microphone, and the voice signal is analysed by pitch and amplitude trackers that return the corresponding MIDI notes. The notes of the melody can also be played back through an oscillator. While the pitch-tracking code was provided by AudioKit, an audio framework for Swift, the project focused on improving the performance of the tracker by implementing complementary filters and other processing to obtain more accurate values.
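AudioKit supplies the frequency and amplitude estimates, and the post-processing stage that follows is where extra filtering can be added. The sketch below illustrates one such stage in Python rather than the app's Swift. The median filter and amplitude gate are hypothetical stand-ins, since the project's own filters are not described in detail. The frequency-to-MIDI conversion uses the standard A4 = 440 Hz = note 69 mapping.

```python
import math
from statistics import median

def hz_to_midi(freq_hz):
    """MIDI note number for a frequency, with A4 = 440 Hz = note 69."""
    return 69.0 + 12.0 * math.log2(freq_hz / 440.0)

def track_to_midi(frames, amp_threshold=0.05, window=5):
    """Turn (frequency_hz, amplitude) tracker frames into rounded MIDI notes.

    Frames below amp_threshold are treated as silence (None). Voiced frames are
    median-filtered over `window` neighbouring frames to suppress isolated octave
    jumps and spikes before conversion. Threshold and window size are hypothetical.
    """
    notes = []
    for i, (freq, amp) in enumerate(frames):
        if amp < amp_threshold or freq <= 0:
            notes.append(None)
            continue
        lo, hi = max(0, i - window // 2), min(len(frames), i + window // 2 + 1)
        voiced = [f for f, a in frames[lo:hi] if a >= amp_threshold and f > 0]
        notes.append(round(hz_to_midi(median(voiced))))
    return notes
```

For example, a single spurious 880 Hz frame in a run of 440 Hz frames is smoothed away, so the whole run maps to MIDI note 69.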

This project was supervised by Professor Andy Hunt and Dr. Stuart Porter. All equipment and software used was provided by the Department of Electronic Engineering and the AudioLab of the University of York.